Continuous GitOps: Using Renovate for Helm Version Management in ArgoCD

Parts:

- Getting images from ECR (Regcred)

- Getting Helm chart from ECR (AWS CLI Cronjob)

- Renovate checking for updates from ECR (Don't need to cover)

- Example GitOps repo

Notes:

For the Argo repo updater, note that we could do this with OIDC if we set up an infrastructure-level authentication provider such as Authelia and connected it to AWS via OIDC. Instead, we will be using a CronJob and an aws-cli image.

Introduction:

This is part 3 of a series on streamlining continuous delivery of microservices. You can find the links to the other parts here:

In the previous parts, we streamlined the process of building and storing images for our microservices in ECR using GitHub Actions, as well as automating the updating of Helm chart dependencies and creation and storing of Helm charts in ECR.

In this final part, we will put the resources we created in the first two parts to use. We will be:

- Setting up and automating a secret to read container images from ECR

- Setting up and automating a secret to read Helm charts from ECR

- Setting up a GitOps repo for ArgoCD to utilise our previously created Helm chart

Use case:

We want to be able to declaratively track and deploy our Helm Chart applications and infrastructure choices with ArgoCD, as well as have automated updates.

The tech involved:

An assumption:

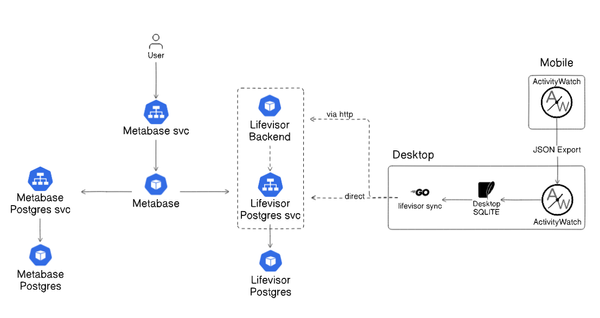

The diagram outlining the full system includes an icon denoting the use of Renovate. The Renovate setup for this GitOps repo is the same as in the previous part, so to save time I won't repeat it here. If you want to learn more about it, check out Part 2.

GitOps Repo:

GitOps is an operational framework based on DevOps practices like CI/CD and version control. Its main utility is the automation of infrastructure and software development.

ArgoCD is a declarative GitOps continuous delivery tool for Kubernetes. In short, ArgoCD reads files from a Git repo, automatically creates the resources defined in those files, and automatically updates those resources when the upstream Git repo changes.

So what will be in our GitOps repo?

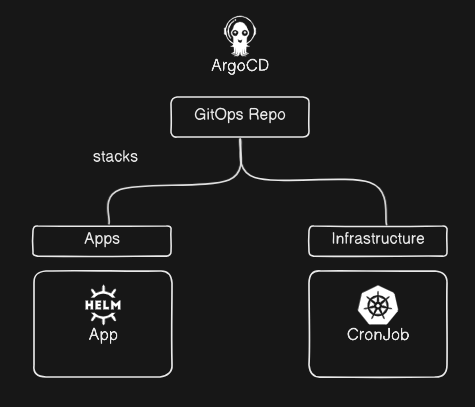

When I write ArgoCD repos, I use a folder called stacks. Each entry inside it defines a set of applications to be deployed in ArgoCD. When we set ArgoCD to sync from our repo, it creates one resource that manages these sets of applications, and each of those application sets, or 'stacks', contains the applications we defined.

An example of how this appears in the ArgoCD UI is below:

Check out the example repo here: repo
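As a rough illustration of the stacks layout described above, a root Application along the following lines could point ArgoCD at the stacks folder. This is a minimal sketch and not necessarily the manifest used in the example repo: the repository URL, the Application name and the `main` branch are placeholders I am assuming.

```yaml
# Hypothetical root Application: syncs everything under stacks/ in the GitOps repo.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: stacks                 # assumed name
  namespace: argocd            # namespace where ArgoCD is installed
spec:
  project: default
  source:
    repoURL: https://github.com/example-org/gitops-repo.git   # placeholder URL
    targetRevision: main       # assumed branch
    path: stacks
    directory:
      recurse: true            # pick up manifests in nested stack folders
  destination:
    server: https://kubernetes.default.svc   # the cluster ArgoCD runs in
    namespace: argocd
  syncPolicy:
    automated:
      prune: true              # delete resources that were removed from Git
      selfHeal: true           # revert manual changes made in the cluster
```

With automated sync enabled, any Application manifest committed under stacks is created by ArgoCD without further manual steps.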

Authenticating Kubernetes to ECR

To deploy the images we stored in ECR in the first part to our cluster, we need to create a Secret in Kubernetes, which will be referenced in the imagePullSecrets section of our Pod definitions.

For this authentication we will need to get our AWS ECR login password (a token which expires after 12 hours) like so:

    aws ecr get-login-password --region eu-central-1

We can then export this as a token and use it to create a Secret:

    TOKEN=$(aws ecr get-login-password --region eu-central-1)

    kubectl create secret docker-registry regcred --docker-server=[OUR ECR URL]/[ECR REPO] --docker-username=AWS --docker-password="$TOKEN"

The above will create a docker-registry secret, which is used to authenticate with a Docker registry or a registry with an equivalent API, which in our case is ECR.

We can then use this in our Pod definitions, either in YAML that is directly deployed to the Kube cluster, or referenced in a Helm Chart:

    apiVersion: v1
    kind: Pod
    metadata:
      name: app-from-private-ecr
    spec:
      containers:
        - name: my-app
          image: [OUR ECR URL]/[ECR REPO]
      imagePullSecrets:
        - name: regcred

Updating the token

As mentioned earlier, the AWS ECR token is only valid for 12 hours, so we need a method for refreshing it. There are two good methods available to us:

- Cronjob running on a node with kubectl.

- CronJob in our cluster. (Recommended)

I won't cover these here, but I will provide links to blog posts outlining each method.

Once this is complete, we have an automatically updating token which allows us to pull our application images from the private ECR repository.

Authenticating ArgoCD to ECR

Now that we can pull the individual application images, we have one step left before we can deploy our Helm charts: we must follow similar steps to those above and authenticate our ArgoCD instance to our ECR Helm repo.

- We will start the same way we did for the Kubernetes authentication, we will generate our ECR login token:

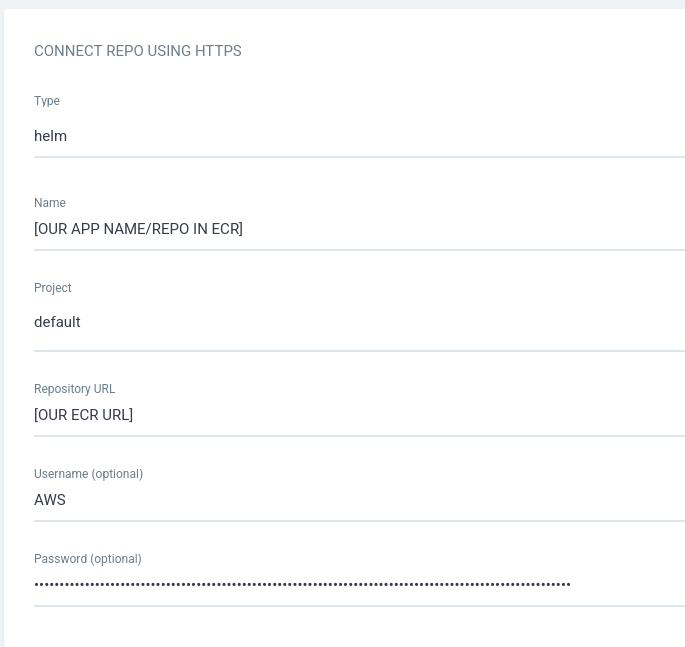

    aws ecr get-login-password --region eu-central-1

- Then we will go to our ArgoCD instance, and create a new Helm repository:

Where the password above is our token which we generated in step 1.

Make sure to also check Enable OCI, which is at the bottom of the Repo page.

This can also be done with the argocd CLI with argocd repo add REPOURL [flags]

You can read more about creating repos with the CLI here:

Once the repo is created, and available in our repository list, our ArgoCD instance will be able to use our Helm charts which we pushed to ECR in the previous part.
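To show how a chart from this repository might then be consumed, here is a hedged sketch of an Application that pulls a Helm chart from ECR over OCI. The account ID placeholder, the chart name `my-app`, the version `1.0.0` and the target namespace are all assumptions for illustration. Note that for OCI registries the repoURL is written without the `oci://` prefix.

```yaml
# Hypothetical Application deploying a Helm chart stored in ECR (OCI).
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: my-app                 # assumed name
  namespace: argocd
spec:
  project: default
  source:
    # Registry host only, no oci:// prefix; replace the account ID placeholder.
    repoURL: <AWS_ACCOUNT_ID>.dkr.ecr.eu-central-1.amazonaws.com
    chart: my-app              # assumed ECR repository / chart name
    targetRevision: 1.0.0      # assumed chart version
    helm:
      releaseName: my-app
  destination:
    server: https://kubernetes.default.svc
    namespace: my-app          # assumed target namespace
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
    syncOptions:
      - CreateNamespace=true   # create the target namespace if it is missing
```

A pinned `targetRevision` like this is the kind of field a tool such as Renovate can bump through a pull request when a new chart version is published.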

However, we have the same issue as before: our token is only valid for 12 hours. Once it lapses, we won't be able to read the Helm charts from our ECR, so we again need to automate the updating of our repository secret.

ArgoCD stores the repository secrets as a Secret in the namespace in which it was deployed, which is usually argocd. The secret will have a name like repo-xxxxxxxxxx.

Within the repo- secret, there is a field for the password, this is our token.
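For reference, a repository Secret of this kind has roughly the shape below. This is a sketch based on ArgoCD's declarative repository format, with placeholder values throughout. The secret name `ecr-helm-repo` and the repository display name are hypothetical. If you create the Secret yourself rather than through the UI, you choose its name, which makes it easier to target from an automated job.

```yaml
# Hypothetical declarative ArgoCD repository Secret for an OCI Helm repo in ECR.
apiVersion: v1
kind: Secret
metadata:
  name: ecr-helm-repo          # assumed name; UI-created secrets look like repo-xxxxxxxxxx
  namespace: argocd
  labels:
    argocd.argoproj.io/secret-type: repository   # marks the Secret as a repository
stringData:
  name: ecr-helm               # assumed display name
  type: helm
  url: <AWS_ACCOUNT_ID>.dkr.ecr.eu-central-1.amazonaws.com   # no oci:// prefix
  enableOCI: "true"
  username: AWS
  password: <ECR_LOGIN_TOKEN>  # placeholder; never commit a real token to Git
```

The `stringData` form accepts plain text. Once stored, Kubernetes keeps the values base64-encoded under `data`, which is why a patch against `data.password` has to supply a base64-encoded token.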

So this leaves us with a few methods for keeping this token up to date:

- Cronjob on a node with kubectl

- Cronjob running in our cluster (Recommended)

Again, I recommend the Cronjob running within our cluster. You can reuse the Cronjob definition from method 2; here's an example:

    containers:
      - command:
          - /bin/sh
          - -c
          - |-
            TOKEN=$(aws ecr get-login-password --region ${REGION})
            # base64 may wrap long output across lines, which would break the JSON patch, so strip newlines
            kubectl patch secret repo-xxxxxxxx -n <namespace> \
              -p '{"data": {"password": "'$(echo -n "$TOKEN" | base64 | tr -d '\n')'"}}'
        env:
          - name: AWS_SECRET_ACCESS_KEY
            value: <AWS_SECRET_ACCESS_KEY>
          - name: AWS_ACCESS_KEY_ID
            value: <AWS_ACCESS_KEY_ID>
          - name: REGION
            value: <REGION>
        image: gtsopour/awscli-kubectl:latest
        imagePullPolicy: IfNotPresent
        name: ecr-token-helper

Modifying the Cronjob definition from Method 2 to use the Job template above will create a Pod every 6 hours that gets a new ECR login token and then patches our existing ArgoCD repo password, giving us consistent access to the Helm charts in our private ECR repository.
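Since the Method 2 definition is not reproduced in this post, here is a hedged sketch of what a complete CronJob around that container could look like, including the RBAC it needs to patch the Secret. The resource name `ecr-token-helper` is reused from the container above, while the `aws-ecr-credentials` Secret (assumed to hold the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY keys), the argocd namespace and the exact schedule are assumptions.

```yaml
# Hypothetical ServiceAccount, Role, RoleBinding and CronJob for refreshing the token.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ecr-token-helper
  namespace: argocd
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ecr-token-helper
  namespace: argocd
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["repo-xxxxxxxx"]   # only the ArgoCD repo secret
    verbs: ["get", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ecr-token-helper
  namespace: argocd
subjects:
  - kind: ServiceAccount
    name: ecr-token-helper
    namespace: argocd
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ecr-token-helper
---
apiVersion: batch/v1
kind: CronJob
metadata:
  name: ecr-token-helper
  namespace: argocd
spec:
  schedule: "0 */6 * * *"              # every 6 hours, well inside the 12-hour token lifetime
  successfulJobsHistoryLimit: 1
  failedJobsHistoryLimit: 1
  jobTemplate:
    spec:
      template:
        spec:
          serviceAccountName: ecr-token-helper
          restartPolicy: OnFailure
          containers:
            - name: ecr-token-helper
              image: gtsopour/awscli-kubectl:latest
              imagePullPolicy: IfNotPresent
              envFrom:
                - secretRef:
                    name: aws-ecr-credentials   # assumed Secret with the AWS keys
              env:
                - name: REGION
                  value: eu-central-1
              command:
                - /bin/sh
                - -c
                - |-
                  TOKEN=$(aws ecr get-login-password --region "${REGION}")
                  kubectl patch secret repo-xxxxxxxx -n argocd \
                    -p '{"data": {"password": "'$(echo -n "$TOKEN" | base64 | tr -d '\n')'"}}'
```

Loading the AWS keys from a Secret via `envFrom` instead of inline `value` fields keeps the credentials out of the manifest, which matters if this definition lives in the GitOps repo.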

What else can we do?

The above is a fairly bare-bones setup for having ArgoCD deploy our application automatically to our cluster. So what else could we do here?

- Multiple Cluster Support: Create deployments across multiple clusters (e.g., dev, staging, prod) to simplify workflows.

- Automated Rollbacks and Health Checks: Configure automatic rollbacks for failed deployments with health checks.

- Progressive Rollouts with Notifications: Implement progressive rollouts like canary or blue-green deployments and integrate these rollouts with Slack or email notifications to alert the team about deployment stages.

And those are only a few of the possible improvement suggestions for our ArgoCD deployment strategy.

Wrapping it up

With the deployment and setup of this final step, we have put the last piece in place for a robust continuous deployment pipeline. This method empowers developers to quickly make changes and create a new release, then have that release flow through the pipeline, being approved where necessary, until ArgoCD automatically deploys the application to a Kubernetes cluster and makes it available to users.

That also completes this blog series, which has followed a modern and robust continuous deployment pipeline from release to delivery.

Don't forget to subscribe for more tips and tutorials on streamlining your continuous delivery processes.

Thanks for reading!