How I host my homelab with GitOps

Background

In August 2022, I came across this post on Reddit by Khue Doan and was so inspired that I decided to overhaul my old server and replace it with my own Kubernetes cluster.

The Hardware

- 3x HP ProDesk 600 G3 DM Mini i5-7500T with 16 GB RAM and 250 GB NVMe

- 1x Synology DS220j with 2x 4 TB HDDs

- 1x TP-LINK 8-Port 10/100/1000Mbps Desktop Switch TL-SG108 V4

The Source

- My homelab repo: https://github.com/azaurus1/homelab

My setup

While Khue's homelab focuses more on the ability to build up and tear down the entire system from the hard drive level, I have so far focused only on the application layer.

Each node runs Ubuntu lite with MicroK8s as the Kubernetes implementation. The cluster also runs an NFS provisioner that points to the Synology DS220j NAS, so all the filesystems for the applications live on the NAS and are replicated in RAID in case of a disk failure.
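As a rough illustration of how an application would request storage from the NFS provisioner, here is a minimal Python sketch that builds a PersistentVolumeClaim manifest and prints it as JSON (which `kubectl apply -f -` accepts). The storage class name `nfs-client`, the claim name, the namespace, and the size are all assumptions for the example, not values taken from my repo.

```python
import json


def nfs_backed_pvc(name, namespace, size, storage_class="nfs-client"):
    """Build a PersistentVolumeClaim manifest as a plain dict.

    storage_class is an assumed name; use whatever class your
    NFS provisioner actually registers in the cluster.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # NFS volumes can be mounted read-write by pods on several nodes.
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical claim for an app's config directory.
pvc = nfs_backed_pvc("jellyfin-config", "media", "5Gi")
print(json.dumps(pvc, indent=2))
```

When a claim like this is bound, the provisioner creates a directory on the NAS export and the pod mounts it over NFS, so the data survives the pod moving between nodes.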

For the application layer, ArgoCD handles Continuous Deployment. It watches my homelab repo on GitHub and automatically syncs any changes in the repo to the application layer.
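To make the "watch the repo and sync automatically" idea concrete, here is a minimal Python sketch that builds an ArgoCD `Application` manifest with automated sync enabled and prints it as JSON. The app name, the `apps/jellyfin` path, and the target namespace are hypothetical and only for illustration; the actual layout of the repo may differ.

```python
import json


def argocd_application(name, repo_url, path, namespace):
    """Build an Argo CD Application manifest as a plain dict."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        # Application objects live in the namespace Argo CD is installed in
        # (assumed here to be "argocd").
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,
                "targetRevision": "HEAD",
                "path": path,  # hypothetical directory inside the repo
            },
            "destination": {
                "server": "https://kubernetes.default.svc",
                "namespace": namespace,
            },
            # automated sync: apply repo changes without a manual sync;
            # prune removes resources deleted from the repo,
            # selfHeal reverts manual drift in the cluster.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


app = argocd_application(
    "jellyfin", "https://github.com/azaurus1/homelab", "apps/jellyfin", "media"
)
print(json.dumps(app, indent=2))
```

With a manifest like this applied, a commit to the repo is enough to change what runs in the cluster; no `kubectl apply` against the application itself is needed.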

Applications

At the time of writing, I run 36 pods from 21 separate k8s deployments, all managed through ArgoCD. The apps that make up those pods can be broken down into the following groups:

- Media

- Monitoring

- Communication

- Self-hosted Cloud

Media

My media app stack is:

- Jellyfin

- Sonarr

- Radarr

- Jackett

- NZBGet

- qBittorrent

With these apps, I can collate and watch all my legitimately obtained personal media, such as DVD-ripped TV shows and movies.

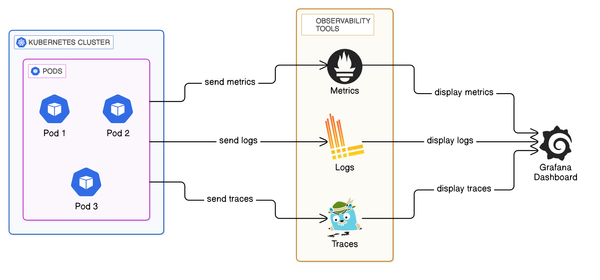

Monitoring

To catch problems such as running out of disk space or memory before they bite, I run the following monitoring applications:

- Prometheus

- Kube node exporters

- Grafana

- exportarr sidecar for Sonarr and Radarr

- Gotify

- Uptime-Kuma

I use one Grafana dashboard for an overview of the drive space on the NAS, and a separate dashboard to monitor the current state of all the k8s nodes.

Uptime-Kuma polls all the applications exposed on *.azaurus.dev and sends notifications via Gotify to my phone and desktop whenever a service is unreachable or has any other issue.
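Uptime-Kuma handles the polling and notifying itself, so none of the following is needed in practice. As a rough picture of what happens under the hood, though, here is a small Python sketch of the same idea: check whether a URL responds, and build a push notification for Gotify's `POST /message` endpoint. The Gotify base URL, the app token, and the service URL are placeholders I made up for the example.

```python
import json
import urllib.request


def is_reachable(url, timeout=5.0):
    """Return True if the URL answers with a non-error HTTP status.

    Network failures and HTTP error statuses both count as unreachable.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except OSError:  # URLError and HTTPError are subclasses of OSError
        return False


def build_gotify_request(base_url, app_token, title, message, priority=5):
    """Build (but do not send) a request that creates a Gotify message."""
    payload = json.dumps(
        {"title": title, "message": message, "priority": priority}
    ).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/message",
        data=payload,
        headers={"Content-Type": "application/json", "X-Gotify-Key": app_token},
        method="POST",
    )


# Placeholder values: substitute your own Gotify URL and application token.
req = build_gotify_request(
    "https://gotify.example.com/",
    "APP_TOKEN_PLACEHOLDER",
    "Service down",
    "https://service.example.com is unreachable",
)
print(req.get_method(), req.full_url)
```

To actually send the notification you would pass `req` to `urllib.request.urlopen`, typically only after `is_reachable` has returned `False` for the monitored service.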

The Helm charts I use for the majority of my applications are from the now deprecated k8s-at-home archive. The charts for Sonarr and Radarr in this archive have a built-in exportarr sidecar, which exposes application data on a port so that Prometheus can pull it into its time-series database.

Communication

I run a Matrix chat server. Matrix is a great open protocol for decentralised and secure communications, and I now use it to bring my multiple chat applications into one chat server: my Facebook Messenger and Instagram chats are bridged to my Matrix server. I also run multiple bots that post RSS feeds on different topics.

Matrix has many client applications that you can use to communicate with the server. I use Element, which seems to be one of the most polished chat clients, but there are many to choose from. I also host Element on Kubernetes so that it's always reachable as long as the server is reachable.

If you use Matrix, I'm reachable at @liam:matrix.azaurus.dev.

Self-hosted Cloud

For the final application group, I host a Nextcloud instance. Nextcloud is a great option for reducing your reliance on Google or any other cloud provider.

Nextcloud has a lot of optional downloadable apps that can replace the functionality you may be used to from Google. I use the Calendar and Tasks apps to sync across all my devices, Nextcloud Office to replace Google Docs and Sheets, and Nextcloud Photos to replace Google Photos.

Many other apps also offer a native option to use a Nextcloud instance as their cloud connection, including apps like Joplin and even operating systems like Ubuntu.

Hosting

Finally, putting it all together: none of this would be much use if I couldn't reach these services over the internet.

Currently I run 3 cloudflared pods with anti-affinity, meaning that each pod runs on a different node in the cluster. This is a redundancy measure: if up to two of the nodes have issues, the services will still be reachable.

Cloudflared is the Cloudflare Tunnel client. It allows you to tunnel your Kubernetes services to your domain using Cloudflare's Zero Trust tunnels. Read more about Cloudflare Tunnel here: link
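For readers who haven't used anti-affinity before, here is a minimal Python sketch of a Deployment manifest that asks the scheduler never to place two cloudflared pods on the same node. It is an illustration under assumptions, not a copy of my manifest: the labels, the image tag, the container args, and the secret name `cloudflared-token` are hypothetical.

```python
import json


def cloudflared_deployment(replicas=3):
    """Build a Deployment manifest whose pods must land on distinct nodes."""
    labels = {"app": "cloudflared"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "cloudflared"},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "affinity": {
                        "podAntiAffinity": {
                            # Hard rule: do not schedule onto a node
                            # (topologyKey = hostname) that already runs
                            # a pod carrying the same labels.
                            "requiredDuringSchedulingIgnoredDuringExecution": [
                                {
                                    "labelSelector": {"matchLabels": labels},
                                    "topologyKey": "kubernetes.io/hostname",
                                }
                            ]
                        }
                    },
                    "containers": [
                        {
                            "name": "cloudflared",
                            "image": "cloudflare/cloudflared:latest",
                            "args": ["tunnel", "--no-autoupdate", "run"],
                            # Tunnel token read from a hypothetical secret.
                            "env": [
                                {
                                    "name": "TUNNEL_TOKEN",
                                    "valueFrom": {
                                        "secretKeyRef": {
                                            "name": "cloudflared-token",
                                            "key": "token",
                                        }
                                    },
                                }
                            ],
                        }
                    ],
                },
            },
        },
    }


deployment = cloudflared_deployment()
print(json.dumps(deployment, indent=2))
```

With three replicas on a three-node cluster, a hard rule like this puts exactly one pod on each node. The trade-off is that a fourth replica would stay pending, because there is no node left without a cloudflared pod.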

Wrapping it up

All in all, self-hosting your everyday services might seem like a daunting task, and it will definitely take a lot of trial and error to set up. But the feeling of accomplishment in being more self-reliant, along with everything you learn on the way to getting it working, is worth it.